Doubling over Time: Understanding Moore’s Law

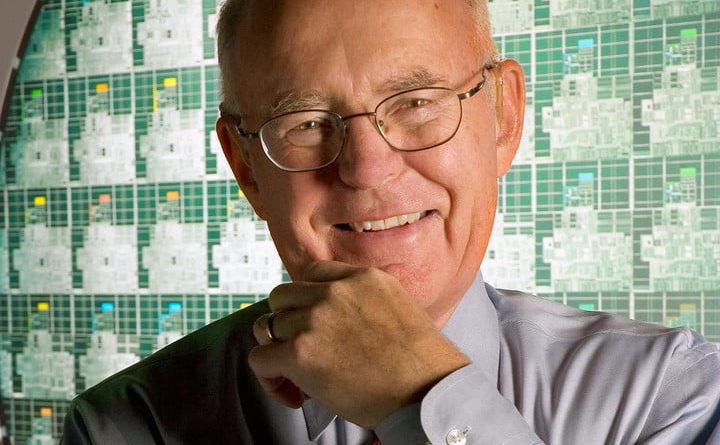

“Moore’s law” is a near-constant reference in contemporary discussions of innovation, technological change, and the information age. This decades-old observation by Intel Corporation’s cofounder Gordon E. Moore that the power of computer chips doubles every 24 months or so is more than just shorthand for rapid technological change; it is an insight into the dynamics of a particular technology—silicon microelectronics.

Although its demise has been predicted numerous times, it’s 2017 and the law still holds, albeit more slowly.

But what exactly is Moore’s law? Is it a prediction, an observation, or something else? Is it a law for innovation, like the ideal gas law in chemistry? How widely does it apply? How did Moore come up with it?

The context in which Moore first formulated his law was miniaturization: the semiconductor community’s experience with it, and its potential in the rapidly emerging technology of integrated circuits in the 1960s.

Miniaturization had been the semiconductor industry’s watchword since the mid-1950s. Just as the small size of the earliest transistors made them preferable to vacuum tubes, miniaturization was a key advantage that successive generations of silicon transistors had over one another. Transistors were fabricated in a batch process that created multiple transistors on a single wafer of semiconductor crystal, using photolithographic, mechanical, and chemical processing steps. Smaller transistors were cheaper to produce because more of them fit on a single wafer; they also operated faster. These better, cheaper discrete transistors opened up new applications and markets for semiconductor electronics throughout the 1950s.

Many of these new applications required large, complex circuits, containing many discrete transistors, along with other components like diodes, capacitors, and resistors. However, the complexity of these circuits—the large number of components and their interconnections—imperiled their reliability. A solution to this problem was to create a complete circuit from a single piece of semiconductor material, with different regions acting as different components. In 1958 Jack Kilby of Texas Instruments succeeded in making the “monolithic” concept a reality by creating the first integrated circuit; however, his creation was unsuitable for mass production.

The same factors that had made miniaturization so important in the development of discrete transistors—enhanced performance and reduced cost—were quickly recognized by the semiconductor community as fundamental to the new technology of integrated circuits. In 1961, Fairchild Semiconductor, co-founded by Gordon Moore, brought to market the planar integrated circuit, the first manufacturable realization of the monolithic ideal. It was based on the firm’s new planar process, which utilized a layer of silicon dioxide to form, protect, and stabilize the integrated circuit. The semiconductor industry quickly gravitated toward Fairchild Semiconductor’s breakthrough silicon planar integrated circuits. Thus, the age of the microchip was born.

From 1960 to 1964 the market for silicon integrated circuits expanded markedly, almost exclusively on the basis of military sales. Prices for integrated circuits were still above what many systems producers were accustomed to paying for electronic components: the technology was new, the volumes were relatively low as compared to discrete devices, and the integrated circuits were most frequently built to stringent military specifications for performance and reliability. Moreover, integrated circuits required potential customers to adopt a new mode for evaluating the cost of electronic components, one that encompassed the costs associated with the equivalent discrete components and the labor to interconnect them into a complete circuit.

Despite these limits, by 1964 many leaders in the semiconductor community had become convinced that integrated circuits were the future of electronics. History proved them correct, but the outcome was far from preordained. These industry leaders sought outlets for presenting their opinions. For example, in the first half of 1964, the Institute of Electrical and Electronics Engineers (IEEE) held its annual international convention in New York City. The convention gathered semiconductor industry leaders for a special session to promote integrated circuits and their potential to the technical professionals who would become their makers and users.

Gordon Moore, from his vantage as director of Fairchild’s R&D lab, was among the semiconductor leaders convinced of the promise of integrated circuits. His chance to promote integrated circuits came on 28 January 1965, when Lewis Young, the editor of the widely circulated trade journal Electronics, wrote inviting Moore to contribute to the magazine’s 35th anniversary issue. “[W]e are asking a half-dozen outstanding people to predict what is going to happen in their field of industry. Because of the innovations you have made in microelectronics and your close interest in this activity we ask you to write your opinion of the ‘future for microelectronics’ . . . I think you might have fun doing this and I am sure the 65,000 readers of Electronics will find your comments stimulating and provocative.” Young’s offer provided Moore with an excellent opportunity to convey his vision of the future for a technology that his firm had pioneered and a market in which it was a strong leader. However, the offer had a notable drawback: Moore would have only one month to complete the article.

Over the next 21 days, Moore considered Young’s challenge and drafted his response. In his manuscript, Moore laid out his vision in the way he preferred: through analysis based on numbers. He seized on two metrics: one capturing the trajectory of the technology of integrated circuit manufacture and the other capturing the trajectory of the associated economics. Moore observed that in the past the dynamic between these two metrics had led to an exponential rise in the technical might of integrated circuits along with an exponential drop in the cost to manufacture them. Looking forward, he could see no factor, technological or economic, threatening the existing dynamic, and he predicted that the exponential change would continue apace for the next decade.
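
To give a feel for what steady exponential change means, here is a minimal sketch in Python. It assumes the 24-month doubling period quoted at the top of this article, not any figure from Moore’s manuscript, and the function name `growth_factor` is made up for illustration.

```python
def growth_factor(years: float, doubling_period_months: float = 24.0) -> float:
    """Return the multiplicative growth after `years` of steady doubling.

    The 24-month default is the popular shorthand for Moore's law;
    it is an assumption of this sketch, not a measured value.
    """
    doublings = years * 12.0 / doubling_period_months
    return 2.0 ** doublings


if __name__ == "__main__":
    # Print how much a quantity grows over a few time spans.
    for years in (2, 10, 20):
        print(f"{years:>2} years -> x{growth_factor(years):,.0f}")
```

Under that assumption, a decade holds five doublings, a 32-fold increase, and two decades hold ten doublings, a 1,024-fold increase.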

In the cover letter accompanying his manuscript, Moore suggested a change to Young’s assignment: “Enclosed is the manuscript for the article entitled ‘The Future of Integrated Electronics’ . . . I am taking the liberty of changing the title slightly from the one you suggested, since I think that ‘integrated electronics’ better describes the source for the advantages in this new technology than does the term ‘microelectronics.’”

Just over one month later, the edited version of Moore’s paper appeared in the 19 April 1965 issue of Electronics, constituting the first public articulation of what came to be known as “Moore’s law.”